ASO AB Testing: Enhancing App Store Performance

Nowadays, ASO AB testing (App Store Optimization A/B testing) is a cornerstone activity. Marketers increasingly recognize the importance of A/B testing their ASO strategies to optimize app performance across marketplaces such as the Apple App Store (iOS) and Google Play (Android).

Table of Contents

- What Is AB Testing?

- Define, Setup, Test, Learn and Repeat: Why Try A/B Testing?

- How To Do AB Testing on Apps?

- What About A/B Testing in ASO?

- ASO A/B Testing: 4 Ideas for Implementation

- Understanding ASO A/B Testing and Results

Growth, especially in a performance context, results from experimentation and innovation. But how can we experiment or innovate without testing new ideas? And in a data-driven world, testing new ideas is pointless unless we track their results and their effect on the bottom line. That’s where AB testing helps: it gives us a systematic, data-driven decision-making process.

What Is AB Testing?

AB testing, also known as split testing, is a randomized experimentation process in which two versions of a variable (for example, a screen, a message, or a store asset) are shown to different groups of users to see which performs better. The method applies to experiments inside an app as well as to app store listings on iOS and Android, and it allows marketers to gather the right data when developing, launching, or growing a mobile app.

In this article, we want to look at how our team of experts at REPLUG has applied this process to App Store Optimization activities and how our partners have benefited from this approach.

In ASO, more specifically, AB testing refers to the process of testing different versions of one element (textual or visual) of the Store Listing to see which one performs best. Here, performance is defined as the change (positive or negative) in the conversion rate of our Store Page.
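To make this metric concrete, here is a minimal Python sketch that computes a store page’s conversion rate (installs divided by store-page visitors) and the relative change of a variant versus the control. The function names and the visitor and install counts are hypothetical, and each store defines “visitors” and “installs” in its own way, so treat this as an illustration of the arithmetic rather than of any console’s exact definition.

```python
def conversion_rate(installs: int, visitors: int) -> float:
    """Share of store-page visitors who installed the app."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return installs / visitors


def relative_change(control_cr: float, variant_cr: float) -> float:
    """Change of the variant's conversion rate relative to the control.

    Positive means the variant converts better, negative means worse.
    """
    if control_cr == 0:
        raise ValueError("control conversion rate must be non-zero")
    return (variant_cr - control_cr) / control_cr


# Hypothetical numbers for one test period.
control = conversion_rate(installs=300, visitors=10_000)  # 3.0%
variant = conversion_rate(installs=330, visitors=10_000)  # 3.3%
print(f"{relative_change(control, variant):+.1%}")        # +10.0%
```

Reporting the relative change (rather than the raw difference of 0.3 percentage points) makes results comparable across listings with very different baseline conversion rates.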

Before jumping into more details, it is crucial to understand why ASO A/B testing is important in a data-driven context.

Related: ASO vs SEO: Discover the Differences and Similarities

Define, Setup, Test, Learn and Repeat: Why Try AB Testing?

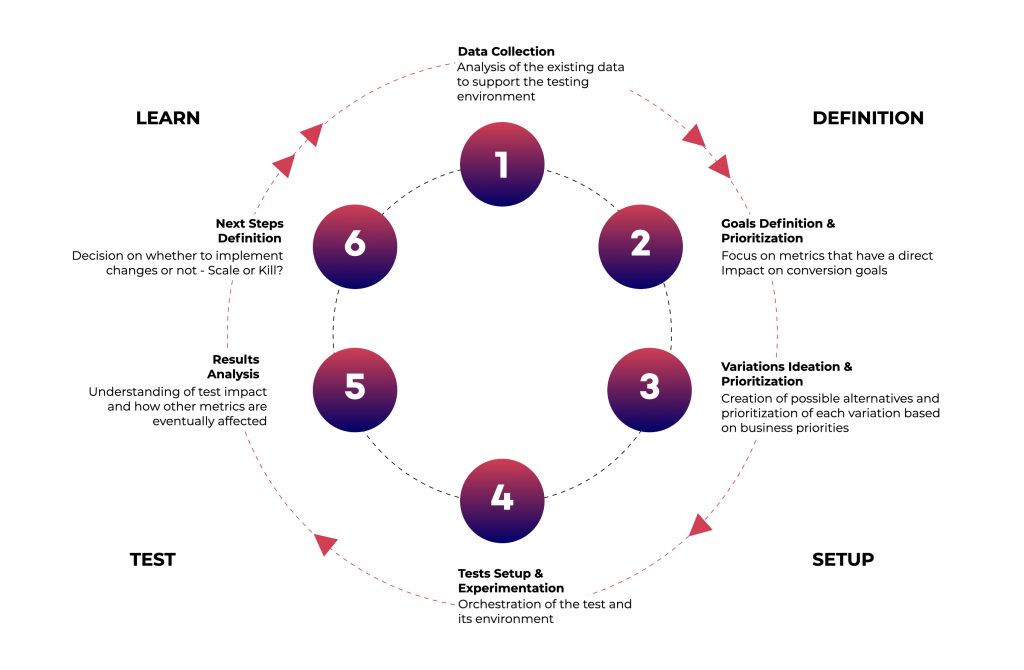

ASO A/B testing is more than a one-off test; it’s a cycle of continuous improvement. It starts with defining a hypothesis, then setting up and running the test, analyzing the results, learning from the outcome, and repeating the process. This cycle is what turns individual tests, on iOS and Android alike, into sustainable growth.

Testing and comparing changes to the user experience before actually rolling them out helps us improve the performance of our mobile apps and our user acquisition efforts.

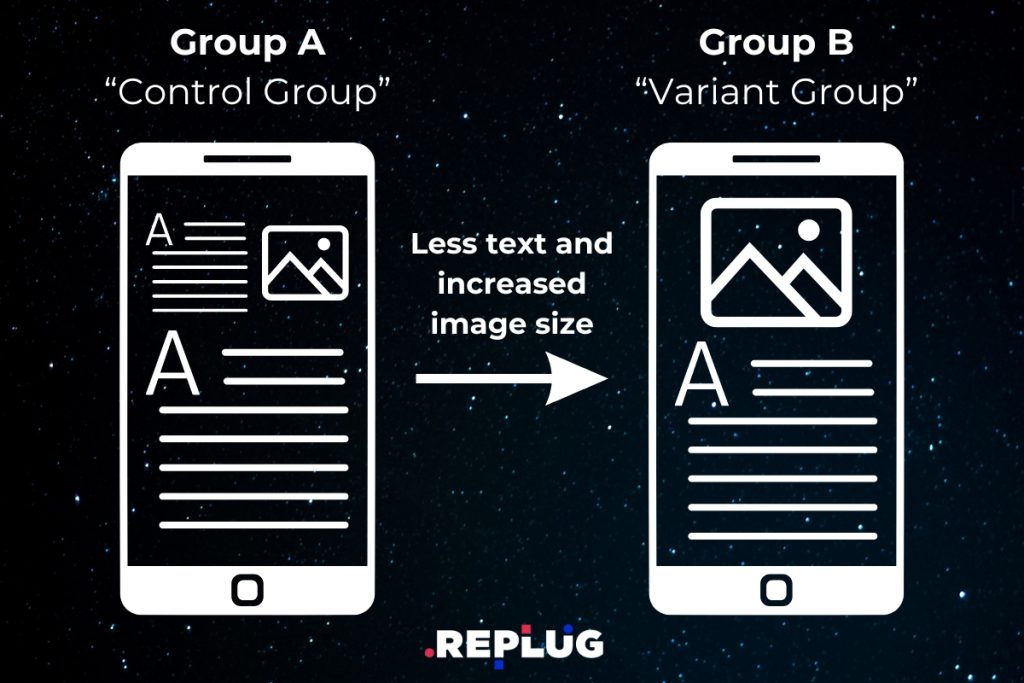

The AB testing process is relatively straightforward: we take a group of users and randomly divide them into two groups (called “variants”), each of which is exposed to a different experience.

The two groups are set up as follows:

- Group A sees the standard experience, which is why it is called the “control group”

- Group B sees the alternative experience, which is why it is called the “variant group”
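In store-listing experiments the split is handled by the platform or testing tool, but for in-app tests we often assign users ourselves. A common technique is deterministic hash-based bucketing, sketched below in Python. It assumes each user has a stable identifier; the function, user, and experiment names are hypothetical.

```python
import hashlib


def assign_group(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Return "A" (control) or "B" (variant) for this user and experiment.

    Hashing the experiment name together with the user ID gives a stable,
    roughly uniform number in [0, 1): the same user always gets the same
    group within an experiment, while different experiments split users
    in unrelated ways.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # first 32 bits -> [0, 1)
    return "B" if bucket < variant_share else "A"


# Hypothetical user and experiment names.
print(assign_group("user-42", "icon-test"))  # "A" or "B", identical on every call
```

Deterministic assignment means a returning user never flips between experiences mid-test, which would otherwise blur the difference between the groups.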

The duration of the test is either fixed beforehand or set to run until we have gathered enough data to compare the results with statistical significance and establish a winner.

The hypothesis we test through an AB test might have a positive effect, a negative effect, or no effect at all, but that shouldn’t stop us from testing: any learning we gather through AB testing is good learning that helps us move a step forward.

In performance marketing, we can test almost everything we want. In our experience, a positive change may be triggered by a slightly different user experience: a different call-to-action (CTA) on an ad, a design detail such as the color of the checkout button, or even a change to the registration process.
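Fixing the test length beforehand usually means estimating how many store-page visitors each group needs. One standard approach is the sample-size formula for comparing two proportions, sketched below in Python under the assumptions of a two-sided test, a 5% significance level, and 80% power (common defaults, not values taken from this article). Dividing the result by the daily traffic each group receives gives a rough test duration. The function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_cr: float, expected_cr: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH group for a two-sided two-proportion z-test
    to detect a change from baseline_cr to expected_cr (normal approximation)."""
    if baseline_cr == expected_cr:
        raise ValueError("expected_cr must differ from baseline_cr")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline_cr + expected_cr) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(baseline_cr * (1 - baseline_cr)
                                   + expected_cr * (1 - expected_cr)))
    return math.ceil(spread ** 2 / (expected_cr - baseline_cr) ** 2)


# Hypothetical inputs: 3.0% baseline conversion, hoping to detect 3.3%.
print(sample_size_per_group(baseline_cr=0.03, expected_cr=0.033))
```

For these hypothetical inputs the formula asks for roughly 53,000 visitors per group. Because the required sample grows roughly with the inverse square of the difference, halving the expected lift roughly quadruples the traffic needed, which is one reason to settle the sample size before looking at results.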

Related: Google Play and App Store Screenshot Sizes: 2023 and 2024 Guide

How to Do AB Testing on Apps?

A/B testing for mobile apps involves splitting your app’s audience into two or more groups, exposing each group to a different version of the variable, and then analyzing the data to see how the variable affects user behavior. Here’s a brief guide:

- Define the Objective: Clearly identify what you want to test (e.g., a feature, design element, or user flow) and what metric you’re looking to improve (like conversion rates, engagement, or retention).

- Create Variants: Develop the different versions of the element you’re testing. Ensure that these variants are distinct enough to measure the impact effectively.

- Segment Your Audience: Divide your app’s users into groups. Each group should be exposed to only one variant to ensure the integrity of the test.

- Implement the Test: Roll out the variants to your segmented audience, ideally using an A/B testing tool that can track user interactions and gather data.

- Analyze Results: Once enough data has been collected for a statistically meaningful comparison, analyze the results to see which variant performed better against your defined objective.

- Implement Learnings: Use the insights gained from the test to make informed decisions about app improvements or feature implementations.
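For the “Analyze Results” step, one common way to compare the conversion rates of two groups is a two-proportion z-test. The sketch below is a minimal Python version using the normal approximation; the function name and counts are hypothetical, and testing tools may rely on other methods (for example, Bayesian or sequential approaches).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: groups A and B convert at the same rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both groups converted at 0% or 100%: no evidence of a difference
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 300/10,000 installs, variant 330/10,000.
z, p = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=330, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these numbers the 10% relative lift is not significant at the usual 5% level (p is roughly 0.22), a reminder that a visible difference is not necessarily a real one.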

Additional Tips on AB Testing

It is fundamental to keep in mind that:

- Our goals and hypotheses should be established before running the test, so that we know precisely what we want to achieve

- The groups should be of equal size (for example, a 50/50 traffic split), so that the control and variant samples are comparable.
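Equal group sizes are also something we can verify after the fact. A widely used sanity check is the sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test comparing the observed split with the planned one. The sketch below is a minimal Python version for two groups, with hypothetical names and counts; a very small p-value suggests that assignment or tracking is broken and that the results should not be trusted as they are.

```python
import math


def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for the
    observed A/B split versus the planned split."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # With 1 degree of freedom, P(chi-square > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(10_050, 9_950))   # small imbalance: large p-value, no alarm
print(srm_p_value(10_000, 10_600))  # suspicious imbalance: p-value far below 0.001
```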

To sum it up, here is the AB testing visualization framework we implement at REPLUG:

When thinking about implementing this process in our strategy, there are a few more things to keep in mind:

- An A/B test can change a single variable or several variables at once

- It is recommended to test one element at a time

- If we test multiple variables at the same time, it becomes much harder to identify which change caused the result

- A/B testing is useful because different audiences may behave differently, giving us insights into distinct subsets of the audience

- The assumptions behind the test setup (for example, that the groups are comparable and exposed to the same conditions) are critical to the success of the process.
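The point about testing several variables at once is partly arithmetic: in a full-factorial test every combination of versions becomes its own cell, so traffic is spread thinner and each cell needs its own sample. The minimal Python sketch below counts the cells; the element names, versions, and daily traffic figure are hypothetical.

```python
from itertools import product


def factorial_cells(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Every combination of element versions in a full-factorial test."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


# Hypothetical store-listing elements and their candidate versions.
elements = {
    "icon": ["current", "blue_background"],
    "screenshots": ["current", "feature_first", "social_proof"],
    "short_description": ["current", "benefit_led"],
}

cells = factorial_cells(elements)
daily_visitors = 6_000  # hypothetical store-page traffic

print(len(cells))                    # 12 (2 x 3 x 2)
print(daily_visitors // len(cells))  # 500 visitors per cell per day
```

Testing one element at a time keeps the comparison at two cells, so each version receives half of the traffic instead of a twelfth.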

What About AB Testing in ASO?

After understanding how the A/B testing process works and its critical role in the performance marketing area, it’s time to focus on its role in the App Store Optimization process.

ASO A/B testing can help us improve two main factors:

- Conversion rate

- Discoverability

More specifically, by applying this iterative process to our ASO strategy, we can identify improvements to specific textual and visual elements of our Store Listings, for example:

- Icon

- Screenshots

- Video preview

- Title

- Subtitle or short description

ASO A/B Testing: 4 Ideas for Implementation

Depending on the testing environment, there are several ways to run A/B tests for app store optimization. Below, we look at the four we have most commonly used with our partners:

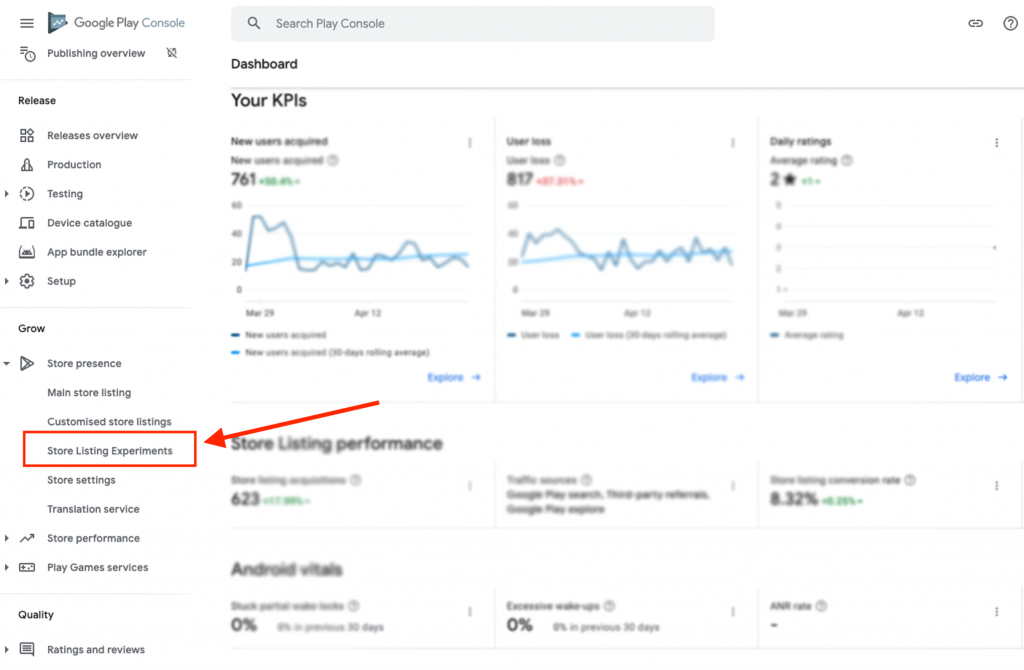

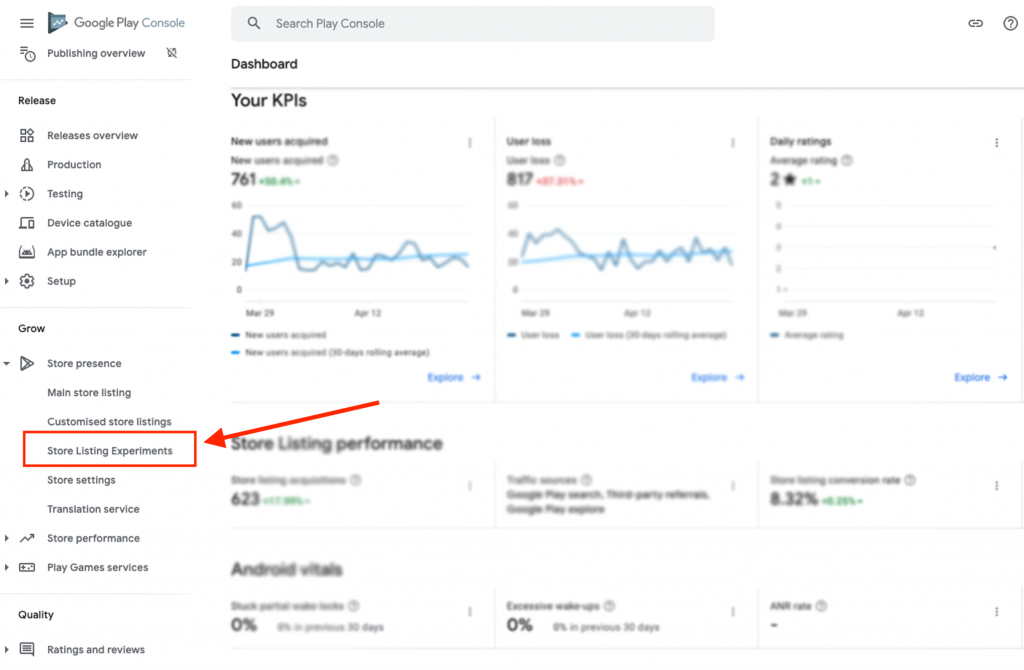

1. Google Play Experiment

This is the easiest, probably the fastest, and the cheapest way for us to A/B test our Store Listing. It is built directly into the Google Play Console (as store listing experiments) and is relatively simple to use. Despite these obvious advantages, we need to consider the following drawbacks:

- There is little room to customize the elements being tested

- Android users don’t necessarily behave in the same way (or respond to the same stimuli) as iOS users, so results from Google Play may not carry over to the App Store.

2. Third-Party Platforms

If we want to run experiments for our iOS users, we might need to rely on third-party tools, such as StoreMaven and SplitMetrics. These tools mimic the Store Listing on a web page so we can study how users interact with the original and its variants. Although these tools offer greater flexibility, they come at a cost that does not always justify the benefits.

3. Apple Search Ads’ Creatives Test

Although this is a “hack,” it is still a valuable way to run ASO A/B tests in the iOS environment: it lets us test different creative sets built from our Store Listing’s visuals on App Store search traffic. However, that traffic is paid rather than organic, and the main drawback of this method is that there’s little flexibility.

4. Country Split

This is a simple and free-to-implement ASO AB testing idea. If we are active in more than one country, we can A/B test different variants in different countries. Although it allows us to operate in the Store environment, there are some significant drawbacks.

For example, the audience in a specific country may react quite differently for cultural reasons rather than because of the variant itself, so the results cannot be universally applied to other countries.

Understanding ASO A/B Testing and Results

Generally speaking, in an A/B testing environment, if one variant performs statistically better than the other, you have a winner, and you should replace the losing variant with it. If neither is statistically better, the element you tested may not be as important as you thought, or the change may have been too small to produce a statistically significant improvement.

Although it is not necessarily complicated to tell when one variant is better than another, we need to track the results of the different tests over time to make sure the learnings we implement are significant and lasting. In fact, a single hypothesis might be linked to several tests that need to be tracked, understood, and eventually implemented.
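The winner-or-no-winner logic above can be expressed as a simple decision rule. The Python sketch below uses one common approach, a normal-approximation (Wald) confidence interval for the difference in conversion rates: it declares a winner only when the interval excludes zero. The function name, messages, and counts are hypothetical, and store consoles or third-party tools may apply different statistics.

```python
import math
from statistics import NormalDist


def call_winner(conv_a: int, n_a: int, conv_b: int, n_b: int,
                confidence: float = 0.95) -> str:
    """Declare a winner only if the confidence interval for the difference in
    conversion rate (B minus A) excludes zero."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)  # unpooled SE
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    low, high = diff - z * se, diff + z * se
    if low > 0:
        return "B wins: replace the control"
    if high < 0:
        return "A wins: keep the control"
    return "no significant difference"


# Hypothetical results with the same 3.0% vs 3.3% rates at two traffic levels.
print(call_winner(300, 10_000, 330, 10_000))
print(call_winner(3_000, 100_000, 3_300, 100_000))
```

With 10,000 visitors per group the interval still includes zero, so there is no winner yet; with ten times the traffic, the same rates produce a clear win for B.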

ASO A/B testing can greatly improve the conversion rates of our store listings. It is an iterative approach to growth that needs to take a leading place in our strategy. At REPLUG, we implement this process methodically for our partners to challenge the status quo and create a growth mindset.

Are you looking for the right partner to scale your app? Get in contact with our experts and learn how we have helped multiple partners succeed.

FAQ

What Is AB Testing in Android?

A/B testing can help you determine which wording and messaging attract the most users to your app. It also lets you introduce new features safely: you launch a new feature only after confirming, with a smaller group of users, that it achieves your objectives.

What Is AB Testing in Mobile App?

Testing different variants of a particular factor, such as design or copy, is known as A/B testing for mobile applications. This testing method involves dividing an audience into two or more separate groups, introducing each group to a distinct version of the variable, and assessing how the variable impacts user behavior.

Why Is AB Testing Important in ASO?

A/B testing enables you to make data-driven choices in order to determine the ASO tactics that will boost the conversion rate of your app. Furthermore, A/B testing allows you to monitor how the traffic you obtain behaves.

How to Do AB Testing in iOS?

Some iOS A/B testing best practices include using fewer elements to keep users’ attention, focusing on a single CTA, immersing users in an interactive app experience, optimizing the user flow, and more.

What is ASO Marketing?

ASO Marketing enhances app visibility in app stores by optimizing elements like the title, keywords, and descriptions to increase downloads and engagement.

Does Apple Do AB Testing?

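Yes, to a degree. Since iOS 15, App Store Connect includes Product Page Optimization, which lets developers test alternative icons, screenshots, and app previews against their default product page. For other kinds of tests, developers use Apple Search Ads or third-party tools for A/B testing on iOS.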

What is AB Testing in ASO?

A/B testing in ASO compares different versions of an app’s store listing to identify which elements improve visibility and conversion rates.

Originally published on April 27, 2021. Updated on November 20, 2023.
